Fitting Correlation Functions with emcee¶

In this demo, we provide a very simple example of how to fit galaxy correlation functions with emcee. This example does not do anything with the complexity required for fitting real-world data. It does not account for measurement error, or redshift evolution or redshift-space distortions. However, it gives a taste of what is achievable.

[1]:

import emcee

import halomod

import numpy as np

import matplotlib.pyplot as plt

from scipy.stats import norm

from multiprocess import Pool

import corner

%matplotlib inline

[2]:

emcee.__version__

[2]:

'3.0.2'

[3]:

halomod.__version__

[3]:

'1.6.1.dev24+g0c844c9.d20200813'

Create Some Mock Data¶

First, let’s create a default galaxy halo model:

[4]:

model = halomod.TracerHaloModel(

z=0.2,

transfer_model='EH',

rnum=30,

rmin=0.1,

rmax=30,

hod_model='Zehavi05',

hod_params={

"M_min": 12.0,

"M_1": 12.8,

'alpha': 1.05,

'central': True

},

dr_table=0.1,

dlnk=0.1,

dlog10m=0.05

)

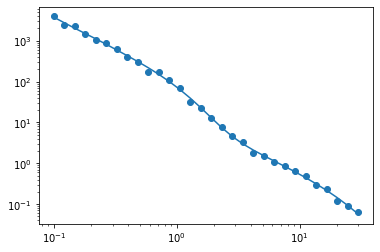

Now, let’s create some mock data with some Gaussian noise:

[5]:

np.random.seed(1234)

mock_data = model.corr_auto_tracer + np.random.normal(scale = 0.1 * np.abs(model.corr_auto_tracer))

mock_ngal = model.mean_tracer_den

[6]:

plt.plot(model.r, model.corr_auto_tracer)

plt.scatter(model.r, mock_data)

plt.xscale('log')

plt.yscale('log')

Define a likelihood¶

Now let’s define a likelihood function, based on some input model for \(\xi(r)\). The model is simply a \(\chi^2\) likelihood.

[7]:

def chi_square(model, data, sigma):

return np.sum(norm.logpdf(data, loc=model, scale=sigma))

Define an emcee-compatible likelihood function¶

Now we define a likelihood function for emcee. There is a bit more flexibility here, as this function needs to calculate priors on all input parameters, and handle exceptions as well. This means this function is rather specific to the problem at hand. We will define a very simple function, but one that is fairly general.

[8]:

fiducial_model = model.clone()

First, we define a small utility function that will take a dictionary in which keys may be dot-paths, and converts it to a nested dictionary:

[9]:

def flat_to_nested_dict(dct: dict) -> dict:

"""Convert a dct of key: value pairs into a nested dict.

Keys that have dots in them indicate nested structure.

"""

def key_to_dct(key, val, dct):

if '.' in key:

key, parts = key.split('.', maxsplit=1)

if key not in dct:

dct[key] = {}

key_to_dct(parts, val, dct[key])

else:

dct[key] = val

out = {}

for k, v in dct.items():

key_to_dct(k, v, out)

return out

So, this will do the following:

[10]:

flat_to_nested_dict(

{

'nested.key': 1,

'nested.key2': 2,

'non_nested': 3

}

)

[10]:

{'nested': {'key': 1, 'key2': 2}, 'non_nested': 3}

This will enable us to pass a list of parameter names that we want updated, which could be parameters of nested models. This means our posterior function is fairly general, and can accept any model parameters to be updated:

[11]:

def log_prob(param_values, param_names, data, model, bounds=None, derived=()):

# Pack parameters into a dict

params = dict(zip(param_names, param_values))

# Allow for simple bounded flat priors.

bounds = bounds or {}

for key, val in params.items():

bound = bounds.get(key, (-np.inf, np.inf))

if not bound[0] < val < bound[1]:

return (-np.inf,) + (None,)*len(derived)

# Update the base model with all the parameters that are being constrained.

params = flat_to_nested_dict(params)

model.update(**params)

ll = chi_square(model.corr_auto_tracer, data[0], 0.1 * np.abs(model.corr_auto_tracer))

ll += chi_square(model.mean_tracer_den, data[1], 1e-4)

if not np.isfinite(ll):

return (-np.inf, ) + (None,)*len(derived)

derived = tuple(getattr(model, d) for d in derived)

out = (ll,) + derived

return out

We can test that the log_prob function works:

[12]:

log_prob(

[12.0, 12.8, 1.05],

['hod_params.M_min', 'hod_params.M_1', 'hod_params.alpha'],

(mock_data, mock_ngal),

model,

derived=['satellite_fraction', 'mean_tracer_den']

)

[12]:

(-51.66355502557364, 0.489931978244314, 0.008754375619587638)

Notice the derived parameters: we can pass any quantity of the TracerHaloModel, and it will be stored on every iteration. Nice!

Run emcee¶

Let’s run a simple model in which we just want to fit \(\sigma_8\) and the spectral index \(n_s\). We use the popular emcee package, and pass in our log_prob model:

[31]:

backend = emcee.backends.HDFBackend("backend.h5")

backend.reset(100, 3)

blobs_dtype = [("sat_frac", float), ("tracer_den", float), ("bias_effective_tracer", float), ("corr_auto_tracer", (float, len(mock_data)))]

sampler = emcee.EnsembleSampler(

nwalkers = 100,

ndim = 3,

log_prob_fn = log_prob,

kwargs = {

'param_names': ['hod_params.M_min', 'hod_params.M_1', 'hod_params.alpha'],

'data': (mock_data, mock_ngal),

'model': model,

'derived': ['satellite_fraction', 'mean_tracer_den', 'bias_effective_tracer', 'corr_auto_tracer'],

},

pool = Pool(32),

blobs_dtype=blobs_dtype,

backend=backend

)

On the advice of the emcee documentation, we set up some initial positions of the walkers around the solution.

[32]:

initialpos = np.array([

fiducial_model.hod.params['M_min'],

fiducial_model.hod.params['M_1'],

fiducial_model.hod.params['alpha']

]) + 1e-4 * np.random.normal(size=(sampler.nwalkers, sampler.ndim))

[ ]:

sampler.run_mcmc(initialpos, nsteps=10000, progress=True);

81%|████████ | 8093/10000 [11:03:34<2:35:37, 4.90s/it]

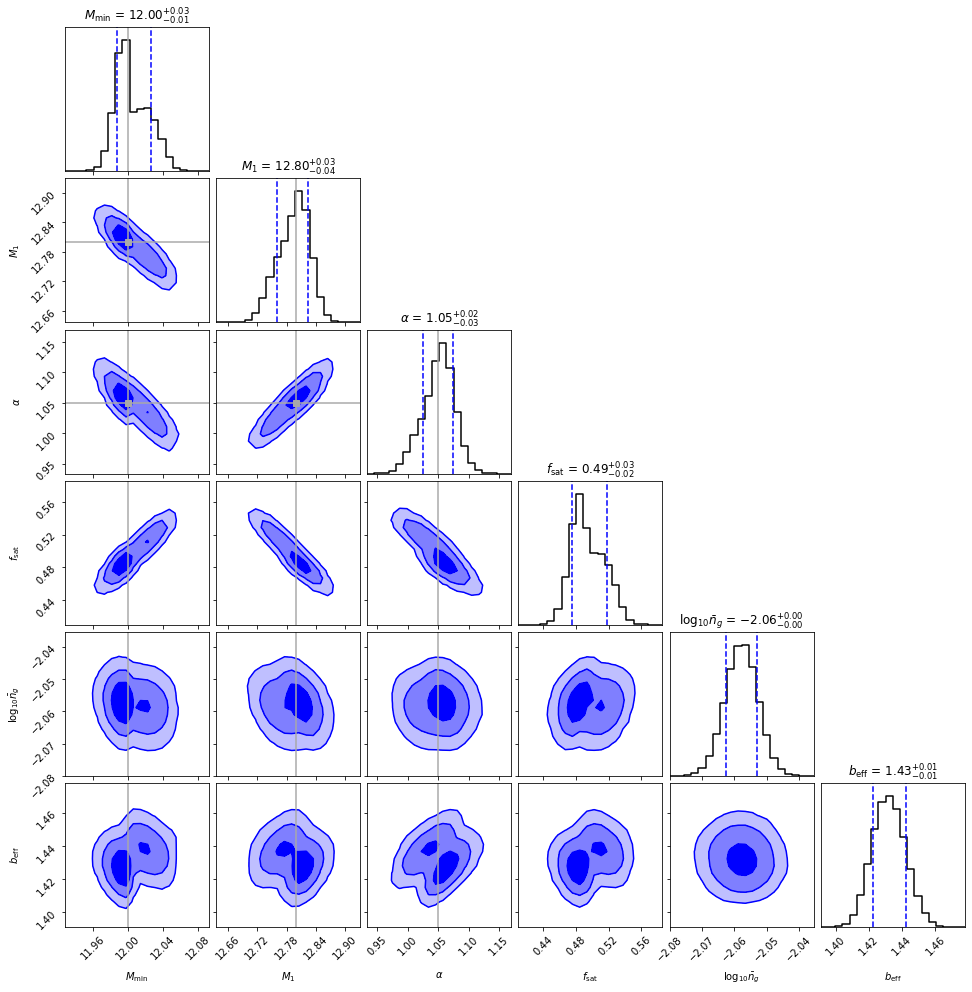

Now we can plot the posterior in a corner plot, along with the derived parameters, and the true input values:

[47]:

flatchain = sampler.get_chain(discard=500, thin=5, flat=True)

blobs = sampler.get_blobs(discard=500, thin=5, flat=True)

flatchain = np.hstack((

flatchain,

np.atleast_2d(blobs['sat_frac']).T,

np.atleast_2d(np.log10(blobs['tracer_den'])).T,

np.atleast_2d(blobs['bias_effective_tracer']).T

))

[48]:

np.save('flatchain', flatchain)

[49]:

corner.corner(

flatchain,

labels=[r'$M_{\rm min}$', '$M_1$', r'$\alpha$', r'$f_{\rm sat}$', r'$\log_{10}\bar{n}_g$',

r'$b_{\rm eff}$'],

quantiles=(0.16, 0.84),

show_titles=True,

#range=lim,

levels=(1-np.exp(-0.5),1-np.exp(-2),1-np.exp(-4)),

plot_datapoints=False,

plot_density=False,

fill_contours=True,

color="blue",

hist_kwargs={"color":"black"},

smooth=0.5,

smooth1d=0.5,

truths=[12., 12.8, 1.05, None, None, None],

truth_color='darkgray'

);

plt.savefig("default_corner.pdf")

And we’re done! The posterior contains the truth to within 1-sigma.

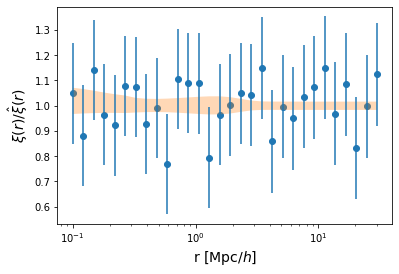

Let’s also plot the residuals:

[44]:

xi_out = sampler.get_blobs(discard=500, thin=5, flat=True)['corr_auto_tracer']

[45]:

quantiles = np.quantile(xi_out, [0.16, 0.50, 0.84], axis=0)

[46]:

plt.scatter(model.r, mock_data / quantiles[1])

plt.errorbar(model.r, mock_data / quantiles[1], yerr = 0.2*np.abs(fiducial_model.corr_auto_tracer) / quantiles[1], fmt='none')

plt.fill_between(model.r, quantiles[0] / quantiles[1], quantiles[2]/ quantiles[1], alpha=0.3)

plt.xscale('log')

plt.xlabel("r [Mpc/$h$]", fontsize=14)

plt.ylabel(r"$\xi(r) / \hat{\xi}(r)$", fontsize=14);

plt.savefig("residuals.pdf")